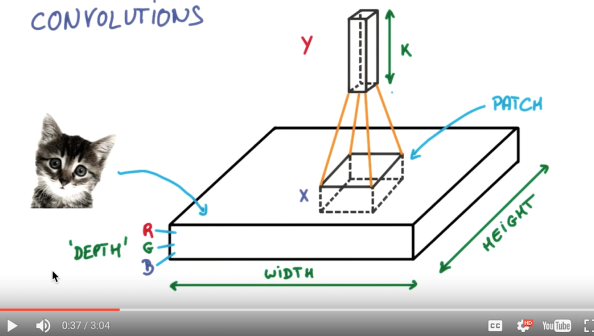

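A Convolutional Neural Network (CNN) is also a feedforward neural network. Its distinguishing feature is that each neuron in a layer responds only to neurons within a local region of the previous layer, whereas in a fully connected network each neuron responds to every node of the previous layer.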
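To make the difference concrete, here is a minimal sketch that counts how many weights a single neuron needs in each case. The 28x28 grayscale input and the 5x5 kernel are illustrative assumptions, and both helper functions are hypothetical names used only for this example.

```python
def weights_per_neuron_dense(height, width, channels=1):
    """A fully connected neuron has one weight per input value."""
    return height * width * channels


def weights_per_neuron_conv(kernel_size, channels=1):
    """A convolutional neuron only sees a kernel_size x kernel_size patch."""
    return kernel_size * kernel_size * channels


# Assumed sizes: a 28x28 grayscale image and a 5x5 kernel.
dense_weights = weights_per_neuron_dense(28, 28)
conv_weights = weights_per_neuron_conv(5)
print(dense_weights, conv_weights)
```

Under these assumptions the locally connected neuron needs 25 weights instead of 784, which is where much of a CNN's parameter saving comes from.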

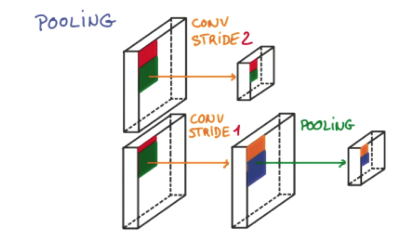

pooling

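Studies have found that a convolutional layer may unintentionally lose some information every time it convolves. Pooling helps with this problem. Pooling is also a filtering step: it picks out the useful information in a layer and passes it on to the next layer for analysis.

It also reduces the computational load of the network. In other words, during convolution we do not shrink the height and width, so that as much information as possible is retained, and the downsampling is left to pooling. This extra step can noticeably improve accuracy. With these techniques in hand, we can build a CNN.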
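As a rough illustration of the downsampling described above, the following sketch implements 2x2 max pooling in NumPy. The function name `max_pool_2x2` and the small 4x4 feature map are made up for this example; a real Keras pooling layer handles batches and channels as well.

```python
import numpy as np


def max_pool_2x2(feature_map):
    """Downsample a 2D feature map by taking the max of each 2x2 block."""
    h, w = feature_map.shape
    assert h % 2 == 0 and w % 2 == 0, "this sketch assumes even height and width"
    # Split into (h/2, 2, w/2, 2) blocks and reduce over the two block axes.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))


# Hypothetical 4x4 feature map.
feature_map = np.array([
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
])
pooled = max_pool_2x2(feature_map)
print(pooled)
```

The height and width are halved while the strongest response in each block is kept.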

```python
import numpy as np
```

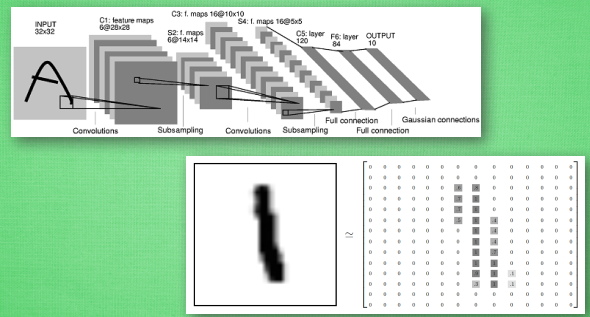

Dataset: MNIST

```python
# download the mnist to the path '~/.keras/datasets/' if it is the first time to be called
```

1. data pre-processing

```python
# reshape to (samples, channels, height, width) and scale pixel values to [0, 1]
X_train = X_train.reshape(-1, 1, 28, 28) / 255.
```
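The pre-processing step can be tried out without downloading anything. The sketch below uses a synthetic stand-in for MNIST (random pixels and made-up labels, both assumptions) to show the channels-first reshape and the scaling. The one-hot encoding of the labels is also an assumption: it is what a 10-class output layer usually expects, but the original code for that part is not shown here.

```python
import numpy as np

# Synthetic stand-in for MNIST: 4 grayscale 28x28 images with uint8 pixels.
rng = np.random.default_rng(0)
X_fake = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)
y_fake = np.array([3, 0, 9, 3])

# Add a channel axis (channels first) and scale pixels to [0, 1].
X = X_fake.reshape(-1, 1, 28, 28) / 255.

# One-hot encode the labels for a 10-class output layer (assumed step).
num_classes = 10
y = np.eye(num_classes)[y_fake]

print(X.shape, X.min(), X.max())
print(y.shape)
```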

2. build model

```python
# Another way to build your CNN
```

Next, add the second convolutional layer and pooling layer.

```python
# Conv layer 2 output shape (64, 14, 14)
```

Fully connected layer 1 input shape (64 * 7 * 7) = (3136), output shape (1024)

```python
model.add(Flatten())
```
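The shapes quoted in the comments can be traced by hand. The sketch below assumes 'same' padding with stride 1 for the convolutions and 2x2 pooling with stride 2, which is consistent with the shapes in this post but is not stated explicitly; `conv_same` and `pool_2x2` are hypothetical helpers.

```python
def conv_same(shape, filters):
    """'same'-padded, stride-1 convolution: only the channel count changes."""
    _, h, w = shape
    return (filters, h, w)


def pool_2x2(shape):
    """2x2 pooling with stride 2 halves height and width."""
    c, h, w = shape
    return (c, h // 2, w // 2)


shape = (1, 28, 28)            # input image, channels first
shape = conv_same(shape, 32)   # conv layer 1
shape = pool_2x2(shape)        # pooling layer 1
shape = conv_same(shape, 64)   # conv layer 2
shape = pool_2x2(shape)        # pooling layer 2

c, h, w = shape
flat_size = c * h * w          # what Flatten() hands to the first Dense layer
print(shape, flat_size)
```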

Fully connected layer 2 to shape (10) for 10 classes

```python
model.add(Dense(10))
```
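The 10 outputs are normally turned into class probabilities. The sketch below assumes a softmax activation follows the last Dense layer; the post does not say which activation is used, so treat this as one plausible choice. The logits are invented values.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# Hypothetical raw outputs of the 10-unit layer for one image.
logits = np.array([[0.1, 0.2, 3.0, 0.0, -1.0, 0.5, 0.3, 0.0, 0.2, 0.1]])
probs = softmax(logits)
predicted_class = int(probs.argmax(axis=-1)[0])
print(probs.round(3), predicted_class)
```

The probabilities sum to 1, and the index of the largest one is the predicted digit.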

define your optimizer

```python
# Another way to define your optimizer
```
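Since the compile step in this post uses Adam, here is a minimal sketch of what a single Adam update does to one scalar parameter. The hyperparameters are the commonly cited defaults and are assumptions here, not the values used in the original code; `adam_step` is a hypothetical helper, not a Keras function.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


theta, m, v = 1.0, 0.0, 0.0
theta, m, v = adam_step(theta, grad=2.0, m=m, v=v, t=1)
print(theta)
```

On the first step the bias-corrected update is roughly `lr` in the direction opposite to the gradient, regardless of the gradient's magnitude.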

keras.layers.Conv1D: 1D convolution layer (e.g. temporal convolution)

Difference between Keras Convolution1D and Convolution2D
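The core difference is the number of axes the kernel slides along. The NumPy sketch below is a simplified, single-channel illustration with no padding and stride 1; it is not how Keras implements these layers, and both function names are hypothetical. Like Keras, it computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np


def conv1d_valid(x, kernel):
    """Slide a 1D kernel along a sequence (no padding, stride 1)."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])


def conv2d_valid(x, kernel):
    """Slide a 2D kernel over an image along both axes (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel)
    return out


sequence = np.arange(6, dtype=float)              # e.g. 6 time steps
image = np.arange(16, dtype=float).reshape(4, 4)  # e.g. a 4x4 image

out_1d = conv1d_valid(sequence, np.array([1.0, 0.0, -1.0]))
out_2d = conv2d_valid(image, np.ones((2, 2)))
print(out_1d.shape, out_2d.shape)
```

The 1D version suits sequences such as time series, while the 2D version suits images like the MNIST digits used here.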

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| conv2d_3 (Conv2D) | (None, 32, 28, 28) | 832 |
| activation_5 (Activation) | (None, 32, 28, 28) | 0 |
| max_pooling2d_3 (MaxPooling2D) | (None, 32, 14, 14) | 0 |
| conv2d_4 (Conv2D) | (None, 64, 14, 14) | 51264 |
| activation_6 (Activation) | (None, 64, 14, 14) | 0 |
| max_pooling2d_4 (MaxPooling2D) | (None, 64, 7, 7) | 0 |
| flatten_2 (Flatten) | (None, 3136) | 0 |
| dense_3 (Dense) | (None, 1024) | 3212288 |
| activation_7 (Activation) | (None, 1024) | 0 |
| dense_4 (Dense) | (None, 10) | 10250 |
| activation_8 (Activation) | (None, 10) | 0 |

Total params: 3,274,634

Trainable params: 3,274,634

Non-trainable params: 0
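The parameter counts in the summary can be checked by hand. The sketch below assumes 5x5 kernels, which is the size that reproduces the reported numbers; the kernel size itself is not shown in this post, and the two helper functions are hypothetical.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights for every kernel plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(in_features, units):
    """One weight per input-output pair plus one bias per unit."""
    return in_features * units + units


# Assumed 5x5 kernels; layer names follow the summary.
counts = [
    conv2d_params(5, 5, 1, 32),      # conv2d_3
    conv2d_params(5, 5, 32, 64),     # conv2d_4
    dense_params(64 * 7 * 7, 1024),  # dense_3
    dense_params(1024, 10),          # dense_4
]
total = sum(counts)
print(counts, total)
```

Almost all of the parameters sit in the first fully connected layer, while the two convolutional layers together account for only a small fraction.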

3. compile model

Set the Adam optimizer, the loss function, and the metrics used to monitor the results.

```python
# We add metrics to get more results you want to see
```
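The post does not show which loss is passed to the compile step. For a 10-class problem with one-hot labels, categorical cross-entropy is the usual choice, so the sketch below assumes it. The function is a hand-written illustration, not the Keras implementation, and the sample values use 3 classes for brevity.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot labels and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)   # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


# Two hypothetical samples with 3 classes.
y_true = np.array([[1, 0, 0], [0, 1, 0]])
y_pred = np.array([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]])
loss = categorical_crossentropy(y_true, y_pred)
print(loss)
```

Only the probability assigned to the correct class contributes to the loss, so confident correct predictions drive it toward zero.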

4. train model

```python
print('Training ------------')
```
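Training proceeds in mini-batches. The sketch below shows how one epoch over the 60,000 MNIST training images is split up. The batch size of 64 is an assumption, since the training call itself is not shown here, and `iter_batches` is a hypothetical helper.

```python
def iter_batches(num_samples, batch_size):
    """Yield (start, stop) index pairs that cover the data set once."""
    for start in range(0, num_samples, batch_size):
        yield start, min(start + batch_size, num_samples)


num_train = 60000   # size of the MNIST training set
batch_size = 64     # assumed batch size

batches = list(iter_batches(num_train, batch_size))
steps_per_epoch = len(batches)
print(steps_per_epoch, batches[-1])
```

The last batch is smaller than the others whenever the data set size is not a multiple of the batch size.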

5. evaluate model

```python
print('\nTesting ------------')
```
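Evaluation on the test set typically reports the loss together with the accuracy metric. As a minimal sketch of how accuracy is computed from predicted probabilities, assuming one-hot labels and using invented values with 3 classes:

```python
import numpy as np


def accuracy(y_true_onehot, y_prob):
    """Fraction of samples whose highest-probability class matches the label."""
    return float(np.mean(y_true_onehot.argmax(axis=1) == y_prob.argmax(axis=1)))


# Hypothetical predictions for 4 test samples and 3 classes.
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]])
y_prob = np.array([
    [0.8, 0.1, 0.1],   # correct
    [0.2, 0.7, 0.1],   # correct
    [0.3, 0.4, 0.3],   # wrong
    [0.1, 0.6, 0.3],   # correct
])
test_accuracy = accuracy(y_true, y_prob)
print(test_accuracy)
```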
